AI technology is a rapidly developing field, presenting many opportunities for companies interested in new ways of supporting their customers. Among the most promising technologies to emerge is the Model Context Protocol (MCP). Workflow automation tools can use MCP services to, for example, connect an AI-powered chatbot to resources such as search indexes, databases, and IoT devices with little development effort. This post explores some of the reasons you may want to do this, as well as some of the risks, without getting too technical.

Pushing the Limits of AI

When most people think of AI in a modern context, they are actually thinking about Large Language Models (LLMs). LLMs are trained on sets of data over the course of months, or even years. These sets of data are organized and distilled into relationships that can be used for generating responses to questions or finding correlations between individual words or ideas. At their most impressive, LLMs can make it seem like you are talking to an actual human being. However, there are some limitations intrinsic to this approach that can be mitigated or resolved with techniques like retrieval-augmented generation (RAG) or, as will be shown in the situations below, MCP.

Imagine you want to provide a chatbot that gives users the distance between cities. You set up a simple chatbot that is completely dependent on an LLM chat model, but you find it provides incorrect answers about 10% of the time. This is because LLMs do not actually "learn"; they interpolate from the data they were trained on. As such, they are highly susceptible to the old adage, "Garbage in, garbage out". To improve the quality of the responses, you might set up an MCP service that the AI can call to look up the answers directly in a data store, or even to calculate them from the geolocation coordinates of the cities.
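For readers who do want a peek under the hood, the calculation mentioned above could look something like the following. This is a minimal sketch, not a reference implementation: it uses the haversine formula with a mean Earth radius, and the function names and the tiny `CITY_COORDS` lookup table are hypothetical stand-ins for whatever data store a real service would use.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an approximation

# Hypothetical lookup table standing in for a real data store.
CITY_COORDS = {
    "New York": (40.7128, -74.0060),
    "Los Angeles": (34.0522, -118.2437),
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point drift before taking asin.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def city_distance_km(city_a, city_b):
    """The operation an MCP tool might expose: distance between two known cities."""
    for city in (city_a, city_b):
        if city not in CITY_COORDS:
            raise ValueError(f"Unknown city: {city}")
    return haversine_km(*CITY_COORDS[city_a], *CITY_COORDS[city_b])


if __name__ == "__main__":
    print(round(city_distance_km("New York", "Los Angeles")), "km")
```

The point is that the answer now comes from arithmetic on known coordinates rather than from the model's recollection, so the same question yields the same result every time.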

In another case, let’s consider you want a chatbot to be able to provide year-to-date statistics for baseball teams. This poses a problem for a strictly LLM-based solution because LLMs are trained over the course of months or years, meaning they do not have real-time information. If you ask an LLM how to build a table, it can probably provide a solid answer. If you ask it who won the World Series this year, you're going to need to give it a tool to access more current information than it was trained on. By connecting the LLM to an MCP service, you allow it to reach beyond its static training data and respond with real-time information.

Now, let’s consider a common business need. Let’s assume I run a boutique hair salon and offer 20 services and 150 different hair care products. I want an AI-powered chatbot to integrate those products and services into its responses. The generalized data used when training an LLM is not going to have the context of my store’s current inventory. Creating an MCP service that connects the AI Agent to a searchable list of my product offerings upgrades the chatbot from a generic conversational tool into a true customer engagement agent.

So far, we’ve considered instances where MCP can improve the quality of chatbot responses, but what if we want to touch the real world, so to speak? Consider that after the chatbot displays the list of hair conditioners my boutique offers, the customer would like to buy one. An MCP service could offer a function to add a product to the customer’s cart. In this way, MCP services can bridge the divide between the virtual world and the real world.

In many of these examples, you may have noticed that the greatest strength of MCP is its interactivity. When an MCP server offers simple operations, the AI Agent is able to combine them flexibly to build ad-hoc contextual information. This results in customer interactions that feel more natural, fluid, and accurate.

What is an MCP server, exactly?

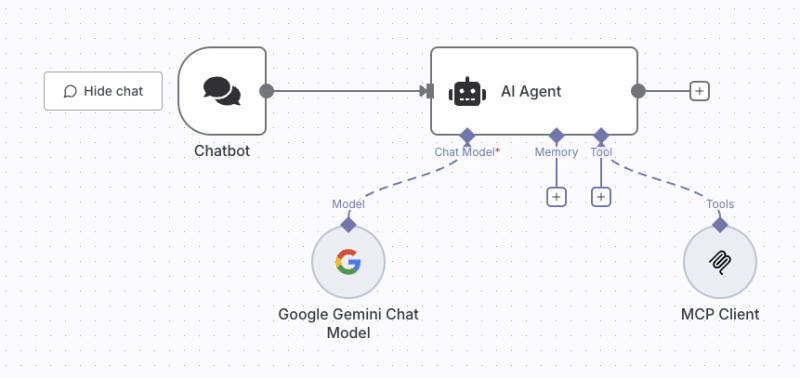

An MCP server is a collection of one or more microservices that generally accomplish simple, well-defined tasks. It can be a web application accessible through the internet or on your local network, or it can be a backend application. An MCP server provides the AI Agent with a list of available tools, each with its purpose, parameters, and usage descriptions written in plain language. The agent incorporates these into its contextual understanding, enabling it to call the tools when appropriate.

The FindTuner MCP server is one implementation designed specifically to extend product discovery and merchandising capabilities to AI Agents. It exposes FindTuner’s query, filtering, and strategy tools as MCP microservices, so AI workflows can interact with product data just as they would with standard FindTuner features. The image above shows a greatly simplified version of how an AI Agent connects an LLM and an MCP server using an n8n workflow. Not seen in the image is the AI Agent prompt, which provides guidance on the purpose of the AI Agent and how it should use the tools it has available.
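To make the idea of a tool list a little more concrete, here is a rough sketch of what such a list might contain for the hair salon example. The field names (`name`, `description`, `inputSchema`) follow the general shape of an MCP tool listing, but the two tools, their parameters, and the helper functions are invented for illustration and are not taken from FindTuner or any real server.

```python
# Hypothetical tool definitions; the tools themselves are made up for this example.
TOOLS = [
    {
        "name": "search_products",
        "description": "Search the salon's product catalog by keyword.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string",
                          "description": "Search terms, e.g. 'conditioner'."},
                "max_results": {"type": "integer",
                                "description": "Optional cap on the number of results."},
            },
            "required": ["query"],
        },
    },
    {
        "name": "add_to_cart",
        "description": "Add a product to the customer's cart.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "sku": {"type": "string",
                        "description": "SKU of the product to add."},
                "quantity": {"type": "integer",
                             "description": "How many to add; defaults to 1."},
            },
            "required": ["sku"],
        },
    },
]


def required_params(tool):
    """Names of the parameters the agent must supply when calling this tool."""
    return list(tool["inputSchema"].get("required", []))


def describe_tools(tools):
    """Render the tool list as plain text, roughly the information an agent works from."""
    lines = []
    for tool in tools:
        lines.append(f"{tool['name']}: {tool['description']}")
        required = set(required_params(tool))
        for param, spec in tool["inputSchema"]["properties"].items():
            flag = "required" if param in required else "optional"
            lines.append(f"  - {param} ({spec['type']}, {flag}): {spec['description']}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(describe_tools(TOOLS))
```

Notice that everything the agent knows about a tool is ordinary descriptive text and a short parameter list, which is why the wording of those descriptions matters so much.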

How the AI Agent uses the MCP Service

Here's where things get a bit fuzzy. AI Agents are not deterministic, meaning you cannot predict exactly how an AI Agent will respond to any given prompt. In fact, it will almost certainly provide a different response if given the exact same prompt twice in a row. This is not to say that it will give an incorrect response. It may relay the same information both times, but there will likely be differences in format, vocabulary, and tone. This variance extends to when and how it makes use of MCP services.

A well-engineered prompt can drastically improve AI Agent performance. Similarly, well-defined descriptions for the MCP microservices can make a huge difference, as can controlling which parameters are required (thereby forcing the AI Agent to provide some value) and which are optional. Deciding how robust each microservice should be can also have a major impact on the reliability and flexibility of the AI Agent interaction. For instance, a microservice might be designed to add a product to a user's cart. It could be as simple as requiring nothing more than the SKU for the product, but then the AI Agent may not be able to correctly process this prompt from a user:

Please add 2 bottles of Shampoo A to my cart.

Alternatively, if the service permits a quantity value to be passed to it, the AI Agent might misinterpret the following user interaction and add a quantity of 0 of Shampoo A:

I think I might want a bottle of Shampoo A.
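One way to soften both problems is to make the quantity optional with a sensible default and to reject values that make no sense, so a mistaken call fails loudly instead of quietly doing the wrong thing. The sketch below illustrates that design choice only. The `Cart` class, the `add_to_cart` function, and the `SHAMPOO-A` SKU are hypothetical, and a real service would sit behind an MCP server rather than being called directly.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Cart:
    """A toy in-memory cart mapping SKU to quantity (hypothetical)."""
    items: Dict[str, int] = field(default_factory=dict)


def add_to_cart(cart: Cart, sku: str, quantity: int = 1) -> int:
    """Add `quantity` units of `sku` to the cart and return the new total for that SKU.

    `sku` is required. `quantity` is optional and defaults to 1, so a request
    like "a bottle of Shampoo A" still works when the agent omits it.
    """
    if not sku:
        raise ValueError("sku is required")
    # Reject 0, negatives, and non-integers instead of silently accepting them.
    if isinstance(quantity, bool) or not isinstance(quantity, int) or quantity < 1:
        raise ValueError("quantity must be a whole number of at least 1")
    cart.items[sku] = cart.items.get(sku, 0) + quantity
    return cart.items[sku]


if __name__ == "__main__":
    cart = Cart()
    add_to_cart(cart, "SHAMPOO-A", 2)  # "Please add 2 bottles of Shampoo A to my cart."
    add_to_cart(cart, "SHAMPOO-A")     # quantity omitted, so one more is added
    print(cart.items)
```

Returning an error for a quantity of 0 gives the agent a chance to notice the problem and ask the customer what they meant, rather than reporting success on an empty action.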

In general, predicting how and when an AI Agent will use an MCP server involves a degree of uncertainty. This is not completely unlike working with actual people, though people have far superior reasoning skills. There comes a point where what actually happens is somewhat out of your hands and you have to trust the AI Agent to do the job. However, just as with a new sales associate, providing better tools will tend to improve job performance.

Final Thoughts

MCP services are great for taking an AI-powered chatbot up to the next level. For a relatively small development effort, they let a chatbot more effectively facilitate customer interaction with a storefront or service provider by supplying timely and specific information. Given a well-planned MCP toolset, a chatbot can move from being an interesting curiosity on a web page to being a useful assistant for connecting people to your business.